V-REP kinect + ROS + rgbdslam

In which I share a negligible amount of code resulting from surprisingly time-consuming research to get it right, along with some gotchas encountered on the way. In the hope that it saves someone else the time, here’s an example of how to hook up a Kinect simulated by V-REP to rgbdslam.

Spoiler: you’ll need to modify the ros_plugin bundled with V-REP and use a short Lua script to set up publishers.

The issue

Publish the minimal set of ROS topics required to run rgbdslam off a Kinect simulated in V-REP.

As a minimum, rgbdslam requires:

- sensor_msgs/Image topic with RGB image

- sensor_msgs/CameraInfo topic with camera geometry data

- sensor_msgs/Image topic with depth image
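A quick way to confirm that all three are really being published is to compare the live topic list against the expected set. The sketch below assumes the topic names used further down in this post and takes plain (topic, type) pairs, which is the shape `rospy.get_published_topics()` returns; `missing_topics` is a made-up helper name, not part of ROS or rgbdslam.

```python
# Topic names are the ones used later in this post; the message types
# are the three that rgbdslam needs as a minimum.
REQUIRED_TOPICS = {
    "/sim/kinect1/rgb/image_rect_color": "sensor_msgs/Image",
    "/sim/kinect1/rgb/camera_info": "sensor_msgs/CameraInfo",
    "/sim/kinect1/depth/image_rect": "sensor_msgs/Image",
}


def missing_topics(published, required=REQUIRED_TOPICS):
    """Return (topic, wanted_type, actual_type) for every required topic
    that is absent or published with a different message type.

    `published` is an iterable of (topic, type) pairs.
    """
    seen = {topic: msg_type for topic, msg_type in published}
    problems = []
    for topic, wanted in sorted(required.items()):
        actual = seen.get(topic)  # None when the topic is not published
        if actual != wanted:
            problems.append((topic, wanted, actual))
    return problems
```

An empty list means rgbdslam has everything it needs; anything else tells you which publisher to look at first.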

In practice you’ll probably want to also send at least

The plugins

V-REP comes with two different ROS plugins: RosPlugin and RosInterface.

RosInterface is built to resemble the ROS API to a large degree: your Lua scripts work with subscribers and publishers directly, sending and receiving messages very much like you would in your C++ or Python ROS code.

RosPlugin, on the other hand, is not as generic, but – in the part that is relevant here – lets you set up fire-and-forget publishers that will stream data without any further work on the Lua side. Which is awesome, because it means there is no need to marshal the data to and from Lua: everything happens on the C++ side.

V-REP documentation says to “make sure not to mix up” the two, which sounds like a warning about plugin clash but turns out to mean just that they are two separate things. It is perfectly okay to use them both at the same time, and it makes a lot of sense: use RosPlugin to stream high-bandwidth image data, and RosInterface to send or receive lower-bandwidth control messages that are easier to handle in Lua.

In this case you’ll only need RosPlugin.

The plugin tweak

RosPlugin provides almost everything necessary to pull it off: out of the box it can publish the RGB and camera geometry data, but falls short when it comes to depth information: it can stream it as vrep_common/VisionSensorDepthBuff, or sensor_msgs/PointCloud2, but rgbdslam really needs it as sensor_msgs/Image.

Apply this changeset to RosPlugin to add a depth Image publisher.
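To give an idea of what a depth Image publisher has to do, here is a rough sketch of the conversion involved. It is not the changeset itself (which is C++), and it rests on assumptions: that the depth buffer holds values normalised to [0, 1] between the sensor's near and far clipping planes, and that the image is published as 32FC1, i.e. one float32 per pixel in metres. The function name and the clipping values in the usage note are made up for illustration, and row order is left untouched.

```python
import struct


def depth_buffer_to_image_fields(depth_buffer, width, height, near_clip, far_clip):
    """Turn a normalised depth buffer into the fields of a depth image.

    depth_buffer: row-major floats in [0, 1], where 0 is assumed to be
    the near clipping plane and 1 the far clipping plane.
    Returns a dict mirroring the fields of sensor_msgs/Image.
    """
    if len(depth_buffer) != width * height:
        raise ValueError("depth buffer size does not match resolution")
    span = far_clip - near_clip
    # Rescale every pixel from [0, 1] to a distance in metres.
    metres = [near_clip + value * span for value in depth_buffer]
    return {
        "width": width,
        "height": height,
        "encoding": "32FC1",   # one float32 per pixel
        "is_bigendian": 0,
        "step": width * 4,     # bytes per image row
        "data": struct.pack("<%df" % len(metres), *metres),
    }
```

For example, `depth_buffer_to_image_fields([0.0, 0.5, 1.0, 0.25], 2, 2, 0.5, 4.5)` describes a 2x2 image with 8 bytes per row and 16 bytes of pixel data.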

Build it, put the resulting dylib/so in the same directory as the main vrep library, and remember to tell it how to find roscore.
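The plugin finds roscore through the ROS_MASTER_URI environment variable, so it can be worth checking that value before starting V-REP. A minimal sketch, assuming the usual http://host:port/ form; `parse_master_uri` is a made-up helper, not something ROS or V-REP provides.

```python
import os
from urllib.parse import urlsplit


def parse_master_uri(uri):
    """Split a ROS master URI into (host, port), or raise ValueError."""
    parts = urlsplit(uri)
    if parts.scheme != "http" or not parts.hostname:
        raise ValueError("expected http://host:port/, got %r" % uri)
    # 11311 is the port roscore listens on by default.
    return parts.hostname, parts.port or 11311


if __name__ == "__main__":
    uri = os.environ.get("ROS_MASTER_URI")
    if uri is None:
        print("ROS_MASTER_URI is not set")
    else:
        print("roscore expected at %s:%d" % parse_master_uri(uri))
```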

You should see a line like Plugin 'Ros': load succeeded. in the output:

    [@trurl ~] ROS_MASTER_URI=http://roshost:11311/ /Applications/vrep/vrep.app/Contents/MacOS/vrep
    Using the default Lua library.
    Loaded the video compression library.
    [...]
    Plugin 'Ros': loading...
    Plugin 'Ros': load succeeded.
    [...]
    Initialization successful.

The simulation

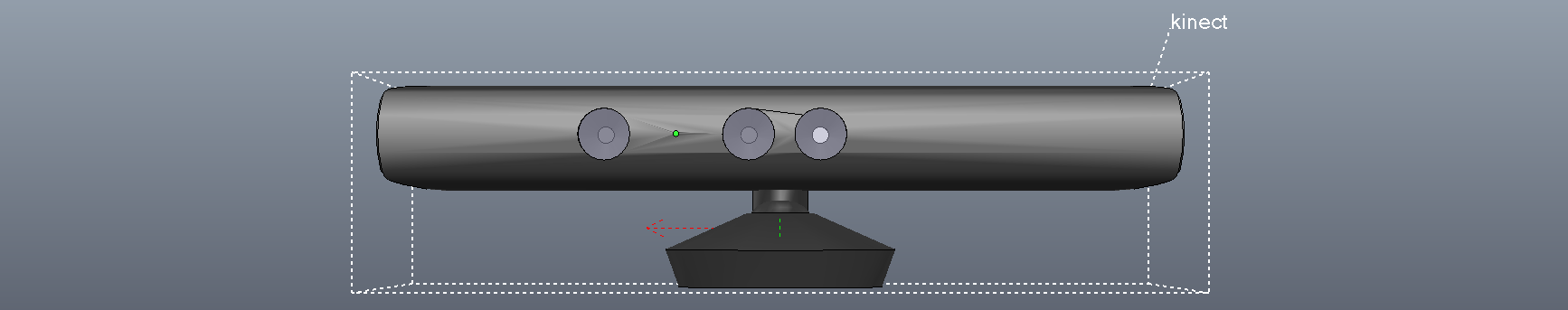

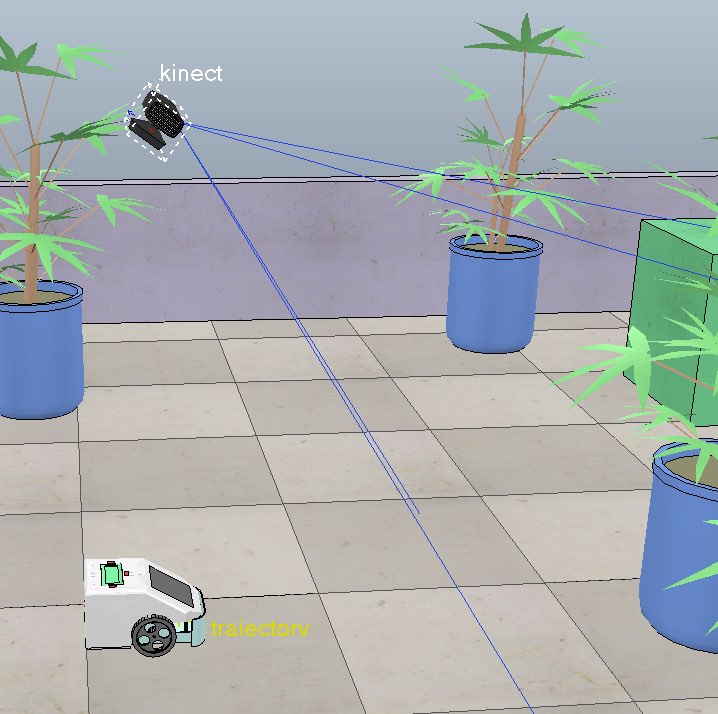

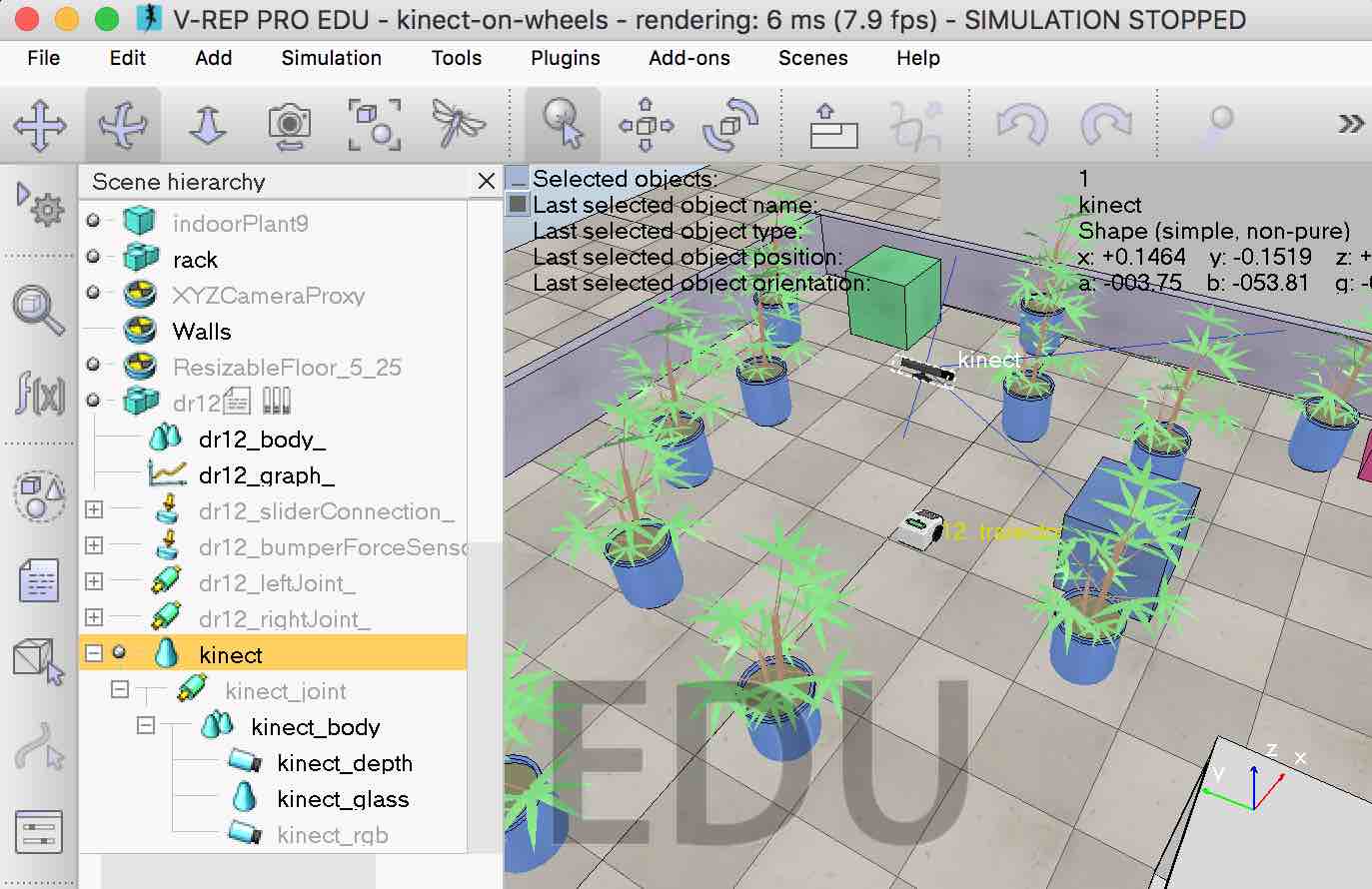

Being lazy, I just bolted the Kinect on top of a DR12 robot from one of the examples bundled with V-REP. Where by “bolted on top” I mean “have it hover 1m above the robot to get a better view.”

Throw in some objects around the robot so that SLAM has some details to pick up and that’s it.

And then, to configure publishers:

    function setup_kinect_sensor_topics(prefix, colorCam, depthCam)
        -- RGB image
        simExtROS_enablePublisher(prefix .. "rgb/image_rect_color", 1,
            simros_strmcmd_get_vision_sensor_image,
            colorCam, -1, "")
        -- Camera geometry
        simExtROS_enablePublisher(prefix .. "rgb/camera_info", 1,
            simros_strmcmd_get_vision_sensor_info,
            colorCam, -1, "")
        -- Depth image
        simExtROS_enablePublisher(prefix .. "depth/image_rect", 1,
            simros_strmcmd_get_vision_sensor_depth_image,
            depthCam, -1, "")
    end

    if (sim_call_type==sim_childscriptcall_initialization) then
        setup_kinect_sensor_topics("/sim/kinect1/",
            simGetObjectHandle("kinect_rgb"),
            simGetObjectHandle("kinect_depth"))
    end

Where kinect_rgb and kinect_depth are the names of the simulated sensors.
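The topic names built here have to match, character for character, what rgbdslam is told to subscribe to, and a typo on either side fails silently. One way to keep the two in sync is to derive the rgbdslam parameters from the same prefix. The sketch below does that with the standard library only; the suffixes are copied from the Lua script, the parameter names are the ones rgbdslam.launch uses, and `launch_params` is a made-up helper.

```python
from xml.etree import ElementTree as ET

# Suffixes copied from the Lua script, paired with the rgbdslam
# parameter that should point at each topic.
PARAM_TO_SUFFIX = [
    ("config/camera_info_topic", "rgb/camera_info"),
    ("config/topic_image_mono", "rgb/image_rect_color"),
    ("config/topic_image_depth", "depth/image_rect"),
]


def launch_params(prefix):
    """Return <param> elements, as strings, for the given topic prefix."""
    lines = []
    for name, suffix in PARAM_TO_SUFFIX:
        element = ET.Element("param", {"name": name, "value": prefix + suffix})
        lines.append(ET.tostring(element, encoding="unicode"))
    return lines


if __name__ == "__main__":
    # Same prefix as passed to setup_kinect_sensor_topics in the Lua script.
    for line in launch_params("/sim/kinect1/"):
        print(line)
```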

Rgbdslam configuration

Use the rgbdslam.launch bundled with rgbdslam, changing the parameters that specify input topics:

    <param name="config/camera_info_topic" value="/sim/kinect1/rgb/camera_info"/>
    <param name="config/topic_image_mono" value="/sim/kinect1/rgb/image_rect_color"/>
    <param name="config/topic_image_depth" value="/sim/kinect1/depth/image_rect"/>

The result

The room in V-REP:

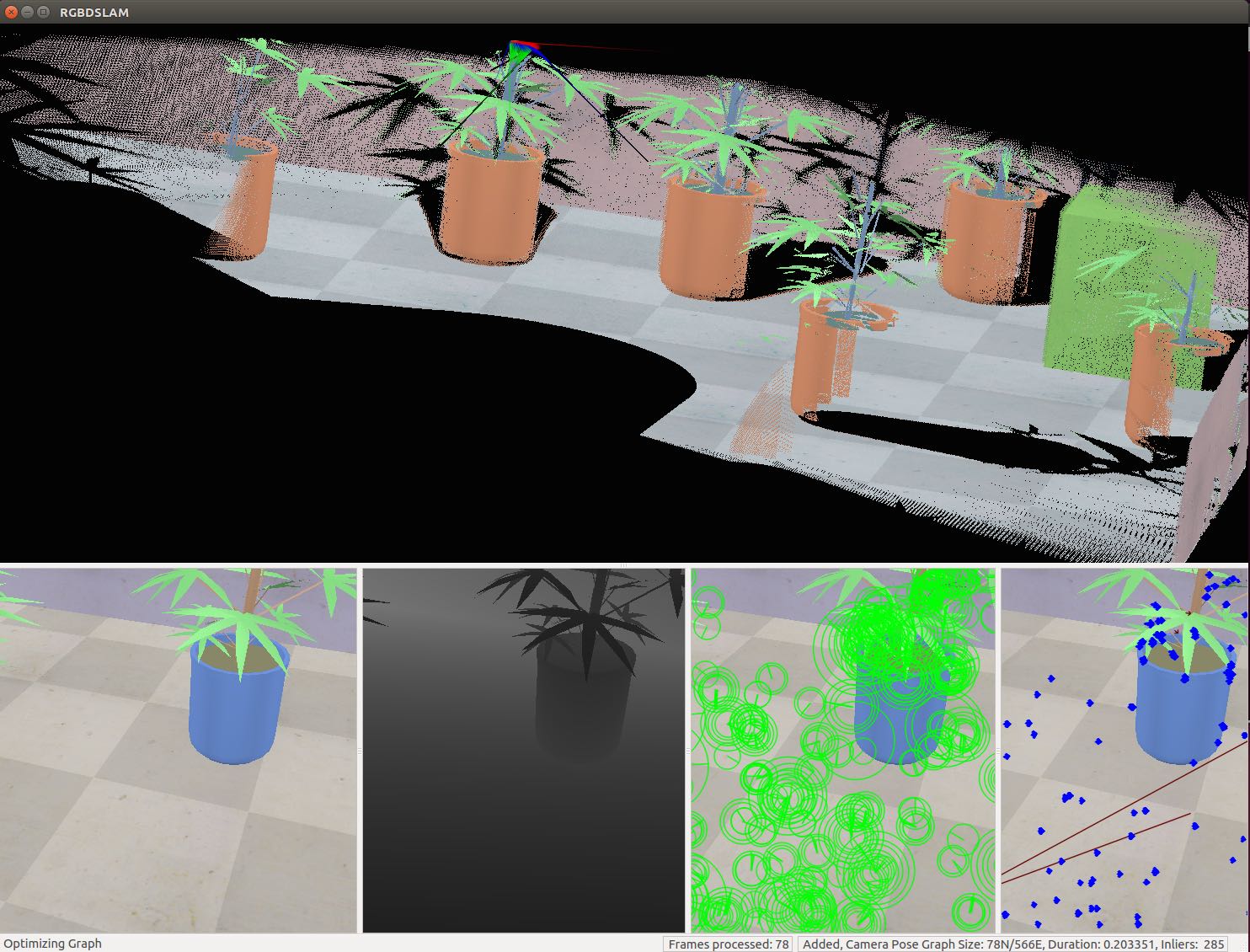

The same scene, as seen by rgbdslam after some rotation:

I have no idea why the point cloud reconstructed by rgbdslam swaps red and blue channels, since – as visible in the bottom row – input images have them the right way, but it’s not important as all I need from SLAM as output is odometry.